Efficient Convolutional Neural Networks for Mobile Vision Applications

Introduction

Convolutional neural networks have become ubiquitous in computer vision ever since AlexNet popularized deep convolutional neural networks by winning the ImageNet Challenge: ILSVRC 2012. The general trend has been to make deeper and more complicated networks in order to achieve higher accuracy. However, these advances in accuracy do not necessarily make networks more efficient with respect to size and speed. In many real-world applications such as robotics, self-driving cars and augmented reality, recognition tasks need to be carried out in a timely fashion on a computationally limited platform.

This paper describes an efficient network architecture and a set of two hyper-parameters for building very small, low-latency models that can be easily matched to the design requirements of mobile and embedded vision applications. Section 2 reviews prior work in building small models. Section 3 describes the MobileNet architecture and two hyper-parameters, the width multiplier and the resolution multiplier, which define smaller and more efficient MobileNets. Section 4 describes experiments on ImageNet as well as a variety of different applications and use cases. Section 5 closes with a summary and conclusion.

Prior Work

There has been rising interest in building small and efficient neural networks in the recent literature. The many different approaches can be generally categorized into either compressing pretrained networks or training small networks directly. This paper proposes a class of network architectures that allows a model developer to specifically choose a small network that matches the resource restrictions (latency, size) of their application. MobileNets primarily focus on optimizing for latency but also yield small networks. Many papers on small networks focus only on size and do not consider speed.

MobileNets are built primarily from depthwise separable convolutions, which were introduced in earlier work and subsequently used in Inception models to reduce the computation in the first few layers. Flattened networks build a network out of fully factorized convolutions and showed the potential of extremely factorized networks. Independent of this paper, Factorized Networks introduces a similar factorized convolution as well as the use of topological connections. Subsequently, the Xception network demonstrated how to scale up depthwise separable filters to outperform Inception V3 networks. Another small network is SqueezeNet, which uses a bottleneck approach to design a very small network. Other reduced-computation networks include structured transform networks and deep fried convnets.

A different approach for obtaining small networks is shrinking, factorizing or compressing pretrained networks. Compression based on product quantization, hashing, and pruning, vector quantization and Huffman coding have been proposed in the literature. Additionally, various factorizations have been proposed to speed up pretrained networks. Another method for training small networks is distillation, which uses a larger network to teach a smaller network. It is complementary to our approach and is covered in some of our use cases in Section 4. Another emerging approach is low-bit networks.

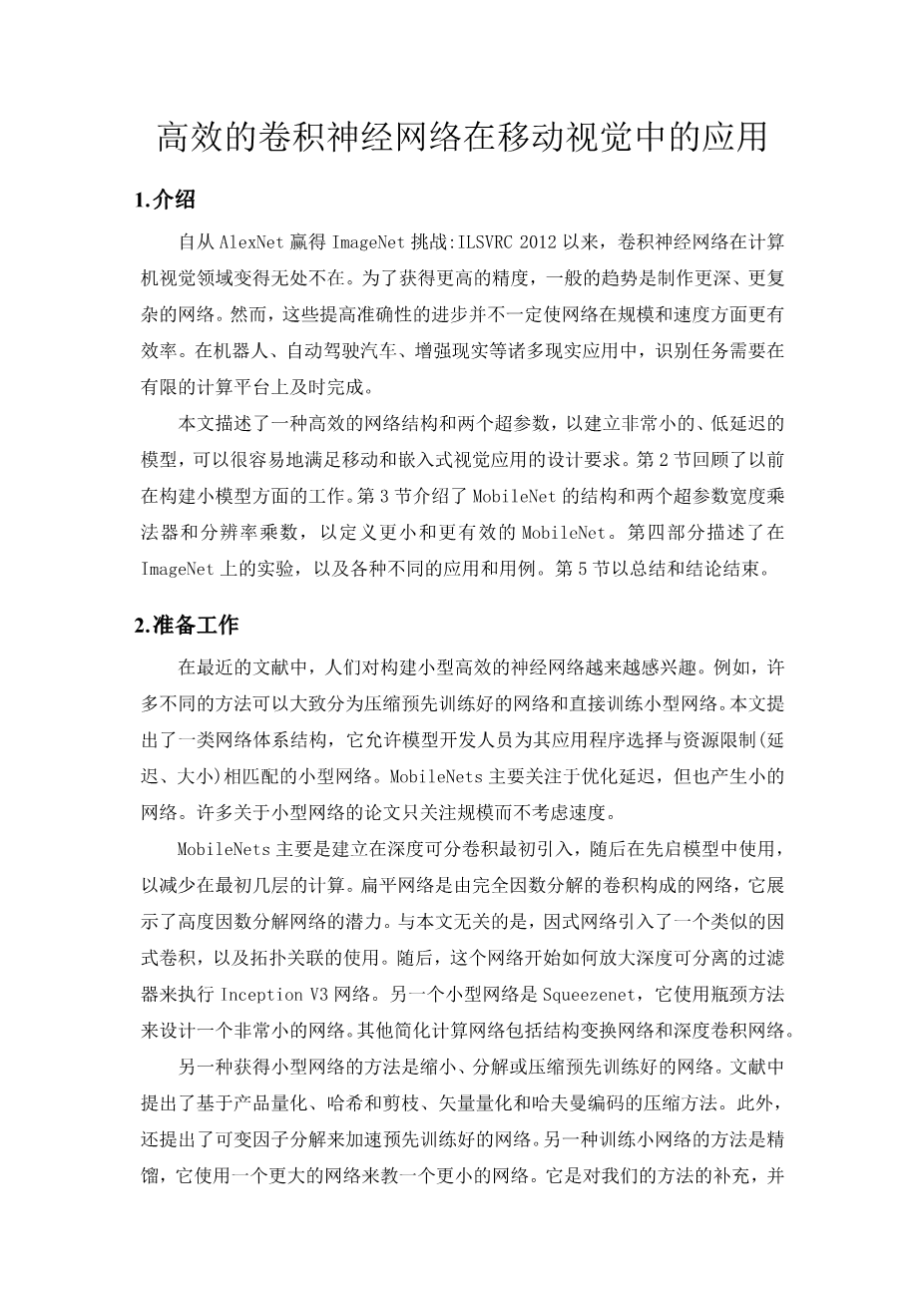

Figure 1. MobileNet models can be applied to various recognition tasks for efficient on-device intelligence. Panels include Face Attributes and Landmark Recognition. Image credits: photo by Juanedc (CC BY 2.0); photo by HarshLight (CC BY 2.0); Google Doodle by Sarah Harrison; photo by Sharon VanderKaay (CC BY 2.0).

MobileNet Architecture

In this section we first describe the core layers that MobileNet is built on, which are depthwise separable filters. We then describe the MobileNet network structure and conclude with descriptions of the two model-shrinking hyper-parameters: width multiplier and resolution multiplier.

Depthwise Separable Convolution

The MobileNet model is based on depthwise separable convolutions, a form of factorized convolution that factorizes a standard convolution into a depthwise convolution and a $1 \times 1$ convolution called a pointwise convolution. For MobileNets the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a $1 \times 1$ convolution to combine the outputs of the depthwise convolution. A standard convolution both filters and combines inputs into a new set of outputs in one step. The depthwise separable convolution splits this into two layers: a separate layer for filtering and a separate layer for combining. This factorization has the effect of drastically reducing computation and model size. Figure 2 shows how a standard convolution 2(a) is factorized into a depthwise convolution 2(b) and a $1 \times 1$ pointwise convolution 2(c).
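The two-step structure described above can be sketched in plain Python. This is a minimal illustration, not the paper's implementation: it assumes stride 1, zero padding that keeps the spatial size unchanged, odd kernel sizes, no bias and no nonlinearity, and the function names (`depthwise_conv`, `pointwise_conv`, `depthwise_separable_conv`) and the toy inputs are hypothetical.

```python
def depthwise_conv(x, filters):
    """Filtering step: apply one k x k filter to each input channel.

    x:       list of M channels, each an H x W list of lists
    filters: list of M filters, each a k x k list of lists (k odd)
    Assumes stride 1 and zero padding, so each output channel is H x W.
    """
    out = []
    for channel, kernel in zip(x, filters):
        h, w, k = len(channel), len(channel[0]), len(kernel)
        pad = k // 2
        filtered = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in range(k):
                    for dj in range(k):
                        r, c = i + di - pad, j + dj - pad
                        # Positions outside the feature map count as zero.
                        if 0 <= r < h and 0 <= c < w:
                            acc += kernel[di][dj] * channel[r][c]
                filtered[i][j] = acc
        out.append(filtered)
    return out


def pointwise_conv(x, weights):
    """Combining step: a 1 x 1 convolution mixing M channels into N.

    weights: N rows, each holding M scalars (one per input channel).
    """
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(row[m] * x[m][i][j] for m in range(len(x))) for j in range(w)]
         for i in range(h)]
        for row in weights
    ]


def depthwise_separable_conv(x, dw_filters, pw_weights):
    """Depthwise filtering followed by pointwise combination."""
    return pointwise_conv(depthwise_conv(x, dw_filters), pw_weights)


# Toy input: M = 2 channels of size 2 x 2, mapped to N = 3 output channels.
x = [[[1.0, 2.0], [3.0, 4.0]],
     [[0.0, 1.0], [1.0, 0.0]]]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # passes the channel through
box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]       # sums the 3 x 3 neighbourhood
pw = [[1.0, 1.0], [2.0, 0.0], [0.0, -1.0]]
y = depthwise_separable_conv(x, [identity, box], pw)
print(len(y), len(y[0]), len(y[0][0]))  # channels, height, width
```

Note that no depthwise filter ever sees more than one channel; all cross-channel mixing happens in the pointwise step.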

A standard convolutional layer takes as input a $D_F \times D_F \times M$ feature map $F$ and produces a $D_F \times D_F \times N$ feature map $G$, where $D_F$ is the spatial width and height of a square input feature map, $M$ is the number of input channels (input depth), $D_G$ is the spatial width and height of a square output feature map (written as $D_F$ above, i.e. the output is taken to have the same spatial size as the input) and $N$ is the number of output channels (output depth).

The standard convolutional layer is parameterized by a convolution kernel $K$ of size $D_K \times D_K \times M \times N$, where $D_K$ is the spatial dimension of the kernel (assumed to be square), $M$ is the number of input channels and $N$ is the number of output channels, as defined above.
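To make the kernel size concrete, the sketch below counts weights for a standard kernel and for the factorized form. The standard count follows directly from the kernel shape given above; the factorized count is derived here from the earlier description (one square filter per input channel, then a pointwise layer mapping M channels to N) rather than quoted from the text. Bias terms are ignored, and the function names and layer sizes are hypothetical.

```python
def standard_conv_params(d_k, m, n):
    """Weights in a D_K x D_K x M x N standard convolution kernel (no bias)."""
    return d_k * d_k * m * n


def depthwise_separable_params(d_k, m, n):
    """Weights in the factorized form (no bias).

    Depthwise: one D_K x D_K filter per input channel.
    Pointwise: a 1 x 1 convolution mapping M channels to N.
    """
    depthwise = d_k * d_k * m
    pointwise = m * n
    return depthwise + pointwise


# Hypothetical layer: 3 x 3 kernel, 512 input and 512 output channels.
d_k, m, n = 3, 512, 512
standard = standard_conv_params(d_k, m, n)
separable = depthwise_separable_params(d_k, m, n)
print(standard, separable, separable / standard)
```

For these sizes the factorized layer holds roughly a ninth of the weights, which is consistent with the claim that the factorization drastically reduces model size.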
